The impact of AI on jobs was a hot topic at last week’s annual World Economic Forum meeting in Davos, with IMF managing director Kristalina Georgieva noting that almost 40% of jobs around the world will be affected by advances in AI. Even though its effect on emerging markets is predicted to be lower than in advanced economies, South Africa is bound to see some shifts in employment as a result of AI adoption.

Henda Scott

Naturally, this raises some serious questions and concerns about the application of this kind of technology and its potential to ‘replace’ humans or ‘steal our jobs’. However, it’s important to note that the impact won’t always be negative. In fact, it’s estimated that around half of the jobs affected will actually benefit from the introduction and implementation of AI.

Time has already shown that even GenAI has its pitfalls and isn’t going to make us all redundant just yet. Being pro-AI doesn’t mean you’re anti-human. And being pro-human shouldn’t mean you’re anti-AI either. Without us, AI wouldn’t exist. And without AI, many of our processes would take a whole lot longer.

Artificial intelligence is the simulation of human intelligence, but that doesn’t necessarily mean the one supersedes the other. At Helm, our goal has always been to use technology to assist – and not replace – people, which is why we believe in developing human-first, AI-powered solutions and experiences.

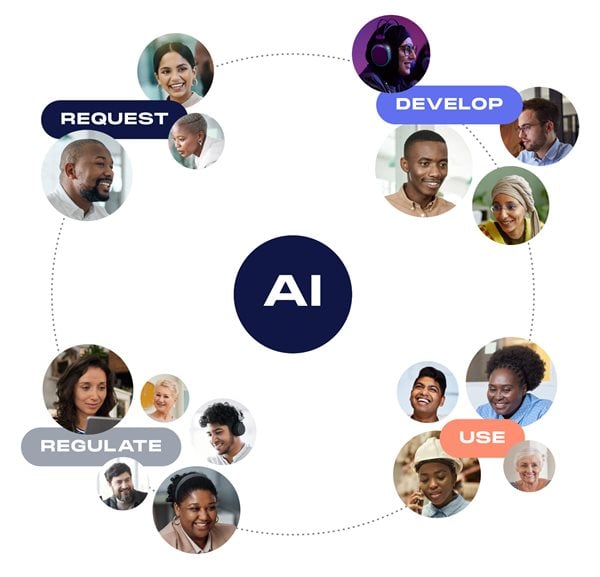

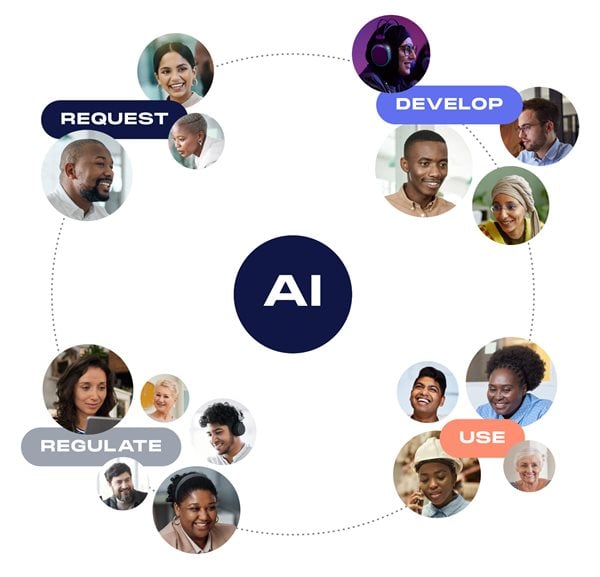

For most of us currently working in AI, the goal is to make it more human-like, and one of the best ways to emulate our behaviour is to engage real people. Human input – in the form of intention, innovation, implementation and intervention – is crucial if the technology is to evolve. So, who are the real people who engage with AI, and how does their involvement shape its evolution?

First up, we have those who request AI – typically our clients. These are the humans who know just enough about the tech to know they want it, but not enough to know whether they actually need it. When a potential client requests an AI solution, our approach is always to first determine their business challenges in order to establish whether AI can really solve the problem. An in-depth understanding and ethical reasoning are crucial and must precede any implementation – after all, AI models don’t come cheap, at least not at the moment. Using AI to solve a problem that could’ve been fixed through simpler alternatives can waste money, as well as precious and limited resources.

One such resource is our team of engineers and designers, who work together to develop AI solutions for our clients and their customers – that is, once we’ve established that AI is the answer. From there, figuring out exactly which solution best fits the problem at hand is often a collaborative effort, with the end goal of creating an interface through which end users can engage with the AI.

There are so many points at which various humans engage with AI, and it’s thanks to their input that AI is able to learn and evolve. As the developers and designers of human-AI experiences, we have the power to direct the flow of information and guide users to meet AI at the most opportune point, where the right information has been captured and processed to produce the right outcome. While the developers of the technology focus on optimising functionality and performance to simulate human reasoning, those who design the experience aim to emulate a true human interaction (whilst always remaining transparent about the fact that the user is, in fact, not engaging with a real person).

But this isn’t where our involvement or responsibility stops. For us, as Africa’s CX innovation experts, the humans who use AI are arguably the most important. As the custodians of the point where they inevitably meet AI, we are responsible for managing every aspect of their experience, from initiation to completion. Not only do we want to create AI solutions that help them, but we also have to make sure our solutions do not cause any harm, however unintended, to them, their livelihoods or their environments.

While many aspects of AI are already governed by existing cyber security, data protection and privacy laws, we recognise that AI technology is developing much faster than legislation can be passed. In addition, we believe the evaluation and regulation of our AI solutions should extend beyond what is lawful to encompass what is ethical as well. This is why it’s important for us to ensure that those who monitor and regulate AI are involved in every step of the development process – from inception to implementation and in every iteration that follows. Having these humans involved from the get-go means we are able to identify potential pain points and take pre-emptive steps to mitigate them, or even reimagine a solution altogether if it poses a risk.

By making the artificial feel real, we can showcase the power of AI and its potential application to solve real-world problems. By removing some of its perceived threats, we can change negative perceptions around the tech and encourage more humans to engage with it. AI is showing no signs of slowing down, and as adoption in Africa continues to soar, so will the need for real accountability. And while we believe in ceding as many tedious tasks as possible to machines, making sure AI is used lawfully, ethically and responsibly will always remain our human responsibility.